How to build a custom object detector using Yolo

I originally wrote this article for Morning Cup of Coding, a newsletter for software engineers who want to stay up to date and learn something new from all fields of programming. Curated by Pek and delivered every day, it is designed to be your morning reading list.

Introduction

You Only Look Once (Yolo) is a state-of-the-art, real-time object detection system developed by Joseph Redmon and Ali Farhadi. Although it is not the most accurate algorithm, it is one of the fastest object detection models, and its accuracy remains reasonable compared to other models. So far there are three versions: Yolo v1, Yolo v2 (YOLO9000), and, most recently, Yolo v3. Each version improves on the previous one.

In this post, we will use transfer learning from a pre-trained Tiny Yolo v2 model to train a detector on a custom dataset. For this purpose, I collected a dataset of images of my Rubik's Cube and built a custom object detector for it. The Tiny Yolo model is much faster but less accurate than the full Yolo v2 model.

Requirements:

- Java Development Kit (JDK).

- NetBeans or any other Java IDE.

- Deeplearning4j, an open-source, distributed deep learning library for the JVM.

- LabelImg, an application for annotating objects in images.

- The source code, available at https://github.com/tahaemara/yolo-custom-object-detector.

- If you don't know how to run a Maven project with NetBeans, see this video: https://www.youtube.com/watch?v=CDkdy3BwIqs.

- A webcam. I used an ELP Sony IMX322 Sensor Mini USB Camera.


Dataset Collection and Annotating

I collected a dataset of my Rubik's Cube with my webcam at a size of 416x416 pixels, which equals the input size of the Tiny Yolo model. I captured the cube in different positions, poses, and scales to achieve reasonable accuracy. The next step is to annotate the dataset, that is, to define the location (bounding box) of the object (the Rubik's cube) in each image. I labeled the images by hand with LabelImg, which is a really tedious task. LabelImg is an application for annotating objects in images. It is written in Python, uses Qt for its graphical interface, and provides binaries for Windows and Linux.

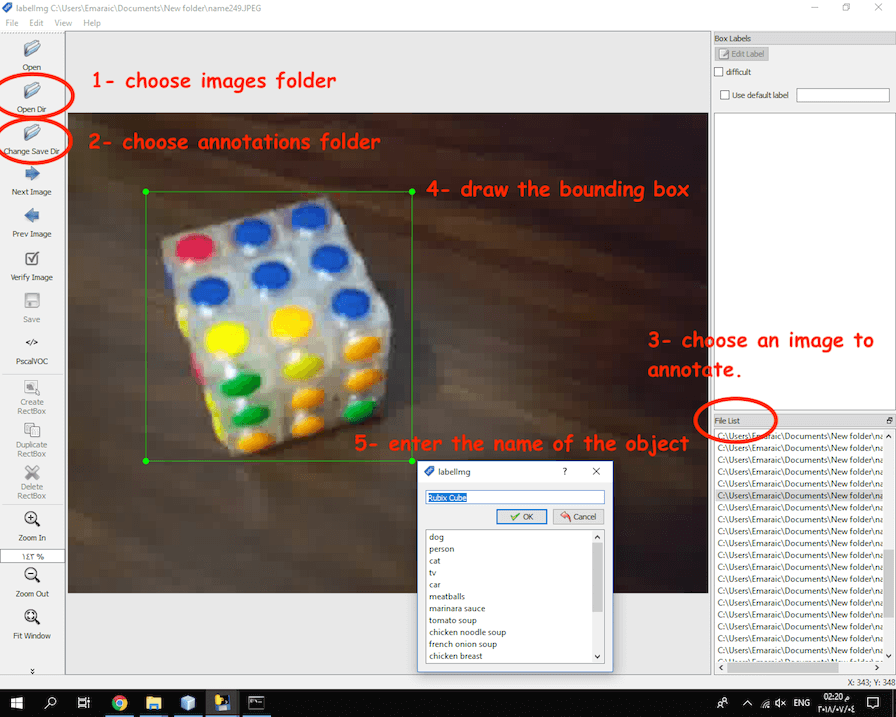

The steps to annotate Rubik's cubes in images using LabelImg:

- Create a folder containing the image files and name it "images".

- Create a folder for the annotation files and name it "annotations".

- Open the LabelImg application.

- Click “Open Dir” and choose the images folder.

- Click “Change Save Dir” and choose the annotations folder.

- All images are now listed in the File List panel.

- Click the image you want to annotate.

- Press “W” on your keyboard to draw a rectangle around the object, then type the object's name in the pop-up window.

- Press “Ctrl+S” to save the annotation as an XML file in the annotations folder.

- Repeat the last three steps (select an image, draw the box, save) until you have annotated all the images.
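By default, LabelImg saves each annotation in the Pascal VOC XML format, which is the format read by the VocLabelProvider used later in the training code. To spot-check an annotation, a minimal sketch like the one below can list the boxes in a file. It uses only the JDK's built-in DOM parser. The class name VocAnnotationReader and the sample label "rubik" are hypothetical and are not part of the project's repository.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper (not in the repository): reads the boxes from a Pascal VOC XML file.
class VocAnnotationReader {

    // A small annotation in the layout LabelImg writes (values are made up).
    static final String SAMPLE =
            "<annotation>"
          + "<folder>images</folder><filename>cube_001.jpg</filename>"
          + "<size><width>416</width><height>416</height><depth>3</depth></size>"
          + "<object><name>rubik</name><difficult>0</difficult>"
          + "<bndbox><xmin>120</xmin><ymin>96</ymin><xmax>260</xmax><ymax>240</ymax></bndbox>"
          + "</object>"
          + "</annotation>";

    // One labeled box; corners are pixel coordinates in the annotated image.
    static final class Box {
        final String name;
        final int xmin, ymin, xmax, ymax;

        Box(String name, int xmin, int ymin, int xmax, int ymax) {
            this.name = name;
            this.xmin = xmin;
            this.ymin = ymin;
            this.xmax = xmax;
            this.ymax = ymax;
        }

        @Override
        public String toString() {
            return name + " (" + xmin + ", " + ymin + ") -> (" + xmax + ", " + ymax + ")";
        }
    }

    // Returns one Box per <object> element in the XML document.
    static List<Box> parse(String xml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a valid XML annotation", e);
        }
        List<Box> boxes = new ArrayList<>();
        NodeList objects = doc.getElementsByTagName("object");
        for (int i = 0; i < objects.getLength(); i++) {
            Element obj = (Element) objects.item(i);
            Element bndbox = (Element) obj.getElementsByTagName("bndbox").item(0);
            boxes.add(new Box(text(obj, "name"),
                    coord(bndbox, "xmin"), coord(bndbox, "ymin"),
                    coord(bndbox, "xmax"), coord(bndbox, "ymax")));
        }
        return boxes;
    }

    // Text of the first descendant element with the given tag.
    private static String text(Element parent, String tag) {
        return parent.getElementsByTagName(tag).item(0).getTextContent().trim();
    }

    // Coordinates are normally integers, but some tools write values such as "120.0".
    private static int coord(Element bndbox, String tag) {
        return (int) Math.round(Double.parseDouble(text(bndbox, tag)));
    }

    public static void main(String[] args) {
        for (Box b : parse(SAMPLE)) {
            System.out.println(b); // rubik (120, 96) -> (260, 240)
        }
    }
}
```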

The training process

The main idea behind building a custom object detector, or even a custom classification model, is transfer learning: reusing an efficient pre-trained model such as VGG, Inception, or ResNet as the starting point for another task. In this case, we remove the last convolution layer of the old model (a pre-trained Tiny Yolo v2), which produces the box and class predictions, add a new layer sized for our classes in its place, and then fine-tune the network on our custom dataset.
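In Yolo v2, the layer being replaced is a convolution that outputs, for every grid cell, one group of 5 + C values per anchor box: the box offsets tx, ty, tw, th, an objectness score, and C class scores. Its depth is therefore anchors x (5 + C). The minimal sketch below computes that depth and the channel index of each value. It assumes an anchor-major channel ordering (all values for anchor 0, then anchor 1, and so on), which is an assumption about how DL4J's Yolo2OutputLayer lays out its input; the class and method names are hypothetical.

```java
// Hypothetical helper: depth and channel layout of a Yolo v2 detection head.
class YoloHeadLayout {

    // Per anchor: tx, ty, tw, th, objectness, then one score per class.
    static final int BOX_VALUES = 5;

    // Number of output channels of the final 1x1 convolution.
    static int channels(int anchors, int classes) {
        return anchors * (BOX_VALUES + classes);
    }

    // Channel holding value k (0-3: tx, ty, tw, th; 4: objectness; 5+: class scores)
    // for anchor b, assuming anchor-major ordering.
    static int channelIndex(int b, int k, int anchors, int classes) {
        if (b < 0 || b >= anchors || k < 0 || k >= BOX_VALUES + classes) {
            throw new IllegalArgumentException("anchor or value index out of range");
        }
        return b * (BOX_VALUES + classes) + k;
    }

    public static void main(String[] args) {
        System.out.println(channels(5, 20));          // 125: pre-trained head, 20 Pascal VOC classes
        System.out.println(channels(5, 1));           // 30: new head, one class (the Rubik's cube)
        System.out.println(channelIndex(2, 4, 5, 1)); // 16: objectness of anchor 2
    }
}
```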

Table 1 briefly describes each class in this project's repository.

| Class Name | Function |
|---|---|
| YoloTrainer | Trains Yolo on our custom dataset. |
| RubixDetector | Real-time Rubik's cube detector: reads a stream of frames from the webcam and then detects the Rubik's cube in each one. |
| YoloModel | Loads the model trained by the class YoloTrainer and runs detection on a given image. |
| NonMaxSuppression | Implements the non-maximum suppression algorithm to cope with Yolo detecting the same object multiple times. |
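The repository's NonMaxSuppression class is not listed in this article. As an illustration of the technique only, here is a minimal sketch of the standard greedy variant: sort detections by confidence, keep the best one, discard every remaining detection whose intersection over union (IoU) with a kept box exceeds a threshold, and repeat. The class name GreedyNms, the corner-coordinate box format, and the 0.5 threshold in the example are assumptions, not taken from the project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical greedy NMS sketch; not the repository's NonMaxSuppression class.
class GreedyNms {

    // A detected box given by its corners in pixels (x1 < x2, y1 < y2) and its confidence.
    static final class Detection {
        final double x1, y1, x2, y2, score;

        Detection(double x1, double y1, double x2, double y2, double score) {
            this.x1 = x1;
            this.y1 = y1;
            this.x2 = x2;
            this.y2 = y2;
            this.score = score;
        }
    }

    static double area(Detection d) {
        return (d.x2 - d.x1) * (d.y2 - d.y1);
    }

    // Intersection over union of two boxes, in [0, 1].
    static double iou(Detection a, Detection b) {
        double w = Math.max(0, Math.min(a.x2, b.x2) - Math.max(a.x1, b.x1));
        double h = Math.max(0, Math.min(a.y2, b.y2) - Math.max(a.y1, b.y1));
        double inter = w * h;
        double union = area(a) + area(b) - inter;
        return union <= 0 ? 0 : inter / union;
    }

    // Visits detections from highest to lowest score and keeps a detection only if it
    // does not overlap an already kept one by more than iouThreshold.
    static List<Detection> suppress(List<Detection> detections, double iouThreshold) {
        List<Detection> sorted = new ArrayList<>(detections);
        sorted.sort(Comparator.comparingDouble((Detection d) -> d.score).reversed());
        List<Detection> kept = new ArrayList<>();
        for (Detection candidate : sorted) {
            boolean duplicate = false;
            for (Detection k : kept) {
                if (iou(candidate, k) > iouThreshold) {
                    duplicate = true;
                    break;
                }
            }
            if (!duplicate) {
                kept.add(candidate);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Detection> raw = Arrays.asList(
                new Detection(100, 100, 200, 200, 0.9),  // the cube
                new Detection(105, 105, 205, 205, 0.8),  // same cube detected again (IoU about 0.82)
                new Detection(300, 300, 350, 350, 0.7)); // a separate box
        System.out.println(suppress(raw, 0.5).size()); // 2
    }
}
```

Keeping a candidate only when it clears every previously kept box gives the same result as the textbook "pick the best, then delete its overlaps" loop, without mutating the input list.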

The YoloTrainer class is responsible for training the model on our dataset. In the next steps, I will briefly explain each portion of its code.

In this portion of the code, we define the parameters needed for the Yolo model, such as the input image dimensions, the number of grid cells, the loss function coefficients for the no-object confidence term and for the position and size/scale terms, the anchor boxes, and the number of classes. We also define the parameters needed for learning, such as the number of epochs, the batch size, and the learning rate, as well as the path to the parent folder that contains the images and annotations folders.

```java
private static final int INPUT_WIDTH = 416;
private static final int INPUT_HEIGHT = 416;
private static final int CHANNELS = 3;
private static final int GRID_WIDTH = 13;
private static final int GRID_HEIGHT = 13;
private static final int CLASSES_NUMBER = 1;
private static final int BOXES_NUMBER = 5;
// anchor boxes as {width, height}, in grid-cell units
private static final double[][] PRIOR_BOXES = {{1.5, 1.5}, {2, 2}, {3, 3}, {3.5, 8}, {4, 9}};
private static final int BATCH_SIZE = 4;
private static final int EPOCHS = 50;
private static final double LEARNIGN_RATE = 0.0001;
private static final int SEED = 1234;
/* parent dataset folder "DATA_DIR" contains two subfolders, "images" and "annotations" */
private static final String DATA_DIR = "C:\\Users\\Emaraic\\Documents\\Dataset";
/* Yolo loss function parameters; for more info see
   https://stats.stackexchange.com/questions/287486/yolo-loss-function-explanation */
private static final double LAMDBA_COORD = 1.0;
private static final double LAMDBA_NO_OBJECT = 0.5;
```
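With a 416x416 input and a 13x13 grid, each grid cell covers 416 / 13 = 32 pixels. Assuming, as DL4J's Yolo2OutputLayer documentation describes, that the prior box sizes in PRIOR_BOXES are given in grid-cell units, the anchor {3.5, 8}, for example, corresponds to a 112x256-pixel box. The hypothetical helper below (not part of the repository) shows this arithmetic, together with the Yolo rule that the grid cell containing a box's center is the one responsible for predicting it.

```java
// Hypothetical helper: the geometry implied by the YoloTrainer constants.
class YoloGridMath {
    static final int INPUT_WIDTH = 416, INPUT_HEIGHT = 416;
    static final int GRID_WIDTH = 13, GRID_HEIGHT = 13;

    // Pixels covered by one grid cell (416 / 13 = 32 in each direction).
    static int cellWidth() { return INPUT_WIDTH / GRID_WIDTH; }
    static int cellHeight() { return INPUT_HEIGHT / GRID_HEIGHT; }

    // Converts an anchor {width, height} from grid-cell units to pixels.
    static double[] anchorInPixels(double[] anchor) {
        return new double[] {anchor[0] * cellWidth(), anchor[1] * cellHeight()};
    }

    // Grid cell {column, row} containing the center of a pixel-space box;
    // in Yolo this cell is responsible for predicting the object.
    static int[] responsibleCell(double xmin, double ymin, double xmax, double ymax) {
        double cx = (xmin + xmax) / 2.0;
        double cy = (ymin + ymax) / 2.0;
        int col = Math.min(GRID_WIDTH - 1, (int) (cx / cellWidth()));
        int row = Math.min(GRID_HEIGHT - 1, (int) (cy / cellHeight()));
        return new int[] {col, row};
    }

    public static void main(String[] args) {
        double[][] priors = {{1.5, 1.5}, {2, 2}, {3, 3}, {3.5, 8}, {4, 9}};
        for (double[] p : priors) {
            double[] px = anchorInPixels(p);
            System.out.println(p[0] + "x" + p[1] + " cells = " + px[0] + "x" + px[1] + " px");
        }
        // A box from (120, 96) to (260, 240) has its center at (190, 168): column 5, row 5
        int[] cell = responsibleCell(120, 96, 260, 240);
        System.out.println("column " + cell[0] + ", row " + cell[1]);
    }
}
```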

In this portion of the code, we set up a web interface for monitoring the training process, similar to TensorBoard for TensorFlow. It runs on port 9000 on your localhost: just open http://localhost:9000 in your browser to monitor training and get useful information about your network, such as the loss score and the number of parameters.

```java
// The UI starts at http://localhost:9000
UIServer uiServer = UIServer.getInstance();
// Configure where the network information (gradients, score vs. time, etc.) is stored. Here: in memory.
StatsStorage statsStorage = new InMemoryStatsStorage();
// Attach the StatsStorage instance to the UI so that its contents can be visualized
uiServer.attach(statsStorage);
```

In this portion of the code, we load the dataset and check that each image file has a corresponding XML annotation file; this is done by the RandomPathFilter. Then we split the dataset into a training set and a test set with a ratio of 0.8/0.2. We use the class ObjectDetectionRecordReader as the image record reader, so that each record contains the input image and the corresponding output, built from the XML annotation file in the Yolo output format. Next, we scale the image pixels from the range [0, 255] to the range [0, 1]. Finally, we create iterators over the training and test sets.

```java
// rng and imageDir are not defined in this excerpt; assumed definitions:
Random rng = new Random(SEED);
File imageDir = new File(DATA_DIR, "images");

// Accept an image only if a matching .xml file exists in the annotations folder
RandomPathFilter pathFilter = new RandomPathFilter(rng) {
    @Override
    protected boolean accept(String name) {
        // name is a file URI string; map .../images/x.jpg to .../annotations/x.xml (only .jpg images match)
        name = name.replace("/images/", "/annotations/").replace(".jpg", ".xml");
        try {
            return new File(new URI(name)).exists();
        } catch (URISyntaxException ex) {
            throw new RuntimeException(ex);
        }
    }
};

// 80% of the images for training, 20% for testing
InputSplit[] data = new FileSplit(imageDir, NativeImageLoader.ALLOWED_FORMATS, rng).sample(pathFilter, 0.8, 0.2);
InputSplit trainData = data[0];
InputSplit testData = data[1];

// Each record: the image plus its Yolo-format label built from the VOC XML annotation
ObjectDetectionRecordReader recordReaderTrain = new ObjectDetectionRecordReader(INPUT_HEIGHT, INPUT_WIDTH, CHANNELS,
        GRID_HEIGHT, GRID_WIDTH, new VocLabelProvider(DATA_DIR));
recordReaderTrain.initialize(trainData);
ObjectDetectionRecordReader recordReaderTest = new ObjectDetectionRecordReader(INPUT_HEIGHT, INPUT_WIDTH, CHANNELS,
        GRID_HEIGHT, GRID_WIDTH, new VocLabelProvider(DATA_DIR));
recordReaderTest.initialize(testData);

// Iterators over both sets; pixel values are scaled from [0, 255] to [0, 1]
RecordReaderDataSetIterator train = new RecordReaderDataSetIterator(recordReaderTrain, BATCH_SIZE, 1, 1, true);
train.setPreProcessor(new ImagePreProcessingScaler(0, 1));
RecordReaderDataSetIterator test = new RecordReaderDataSetIterator(recordReaderTest, 1, 1, 1, true);
test.setPreProcessor(new ImagePreProcessingScaler(0, 1));
```

In this portion of the code, we load the pre-trained Tiny Yolo model and define the parameters needed for fine-tuning. We then build our computation graph from the pre-trained model by removing its last convolution layer (conv2d_9), whose output shape is 13x13x125, that is, gridCellHeight x gridCellWidth x (numberAnchorBoxes x (5 + numberClasses)) = 13x13x(5x(5+20)), and replacing it with a convolution layer with the new output shape 13x13x(5x(5+1)) = 13x13x30. Finally, the model is saved for use in the detector app. You may notice that I did not implement any way to measure the model's accuracy, such as mIoU; I leave that for anyone who wants to implement it. I only visualized the model's predictions on the test data without computing mIoU.
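For anyone who wants to add that evaluation, here is a minimal sketch of one simple definition of mean IoU: for each ground-truth box, take the best IoU achieved by any predicted box in the same image (0 if the model predicted nothing), then average over all ground-truth boxes. This definition, the class name MeanIou, and the {x1, y1, x2, y2} box format are assumptions. The ground-truth boxes could come from the XML annotations of the test split, and the predictions from the trained model after non-max suppression.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical evaluation helper (not in the repository): mean IoU over ground-truth boxes.
class MeanIou {

    // Intersection over union of two boxes given as {x1, y1, x2, y2} in pixels.
    static double iou(double[] a, double[] b) {
        double w = Math.max(0, Math.min(a[2], b[2]) - Math.max(a[0], b[0]));
        double h = Math.max(0, Math.min(a[3], b[3]) - Math.max(a[1], b[1]));
        double inter = w * h;
        double union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter;
        return union <= 0 ? 0 : inter / union;
    }

    // groundTruth.get(i) and predictions.get(i) hold the boxes of test image i.
    static double meanIou(List<List<double[]>> groundTruth, List<List<double[]>> predictions) {
        if (groundTruth.size() != predictions.size()) {
            throw new IllegalArgumentException("one prediction list per image is required");
        }
        double sum = 0;
        int count = 0;
        for (int i = 0; i < groundTruth.size(); i++) {
            for (double[] gt : groundTruth.get(i)) {
                double best = 0; // stays 0 if nothing was predicted for this image
                for (double[] p : predictions.get(i)) {
                    best = Math.max(best, iou(gt, p));
                }
                sum += best;
                count++;
            }
        }
        return count == 0 ? 0 : sum / count;
    }

    public static void main(String[] args) {
        double[] cube = {0, 0, 10, 10};
        List<List<double[]>> gt = Arrays.asList(
                Collections.singletonList(cube),
                Collections.singletonList(cube),
                Collections.singletonList(cube));
        List<List<double[]>> pred = Arrays.asList(
                Collections.singletonList(new double[] {0, 0, 10, 10}), // perfect: IoU 1
                Collections.singletonList(new double[] {5, 0, 15, 10}), // half shifted: IoU 1/3
                Collections.<double[]>emptyList());                     // missed: IoU 0
        System.out.println(meanIou(gt, pred)); // (1 + 1/3 + 0) / 3, about 0.444
    }
}
```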

```java
// Tiny Yolo v2 with pre-trained weights from the DL4J model zoo
ComputationGraph pretrained = (ComputationGraph) TinyYOLO.builder().build().initPretrained();
INDArray priors = Nd4j.create(PRIOR_BOXES);

FineTuneConfiguration fineTuneConf = new FineTuneConfiguration.Builder()
        .seed(SEED)
        .optimizationAlgo(OptimizationAlgorithm.STOCHASTIC_GRADIENT_DESCENT)
        .gradientNormalization(GradientNormalization.RenormalizeL2PerLayer)
        .gradientNormalizationThreshold(1.0)
        .updater(new RmsProp(LEARNIGN_RATE))
        .activation(Activation.IDENTITY).miniBatch(true)
        .trainingWorkspaceMode(WorkspaceMode.ENABLED)
        .build();

ComputationGraph model = new TransferLearning.GraphBuilder(pretrained)
        .fineTuneConfiguration(fineTuneConf)
        .setInputTypes(InputType.convolutional(INPUT_HEIGHT, INPUT_WIDTH, CHANNELS))
        // drop the old 125-channel output convolution (5 anchors x (5 + 20 classes))
        .removeVertexKeepConnections("conv2d_9")
        // new 1x1 convolution: 5 anchors x (5 + 1 class) = 30 output channels
        .addLayer("convolution2d_9",
                new ConvolutionLayer.Builder(1, 1)
                        .nIn(1024)
                        .nOut(BOXES_NUMBER * (5 + CLASSES_NUMBER))
                        .stride(1, 1)
                        .convolutionMode(ConvolutionMode.Same)
                        .weightInit(WeightInit.UNIFORM)
                        .hasBias(false)
                        .activation(Activation.IDENTITY)
                        .build(), "leaky_re_lu_8")
        // Yolo v2 output layer (loss) using our anchors
        .addLayer("outputs",
                new Yolo2OutputLayer.Builder()
                        .lambbaNoObj(LAMDBA_NO_OBJECT)
                        .lambdaCoord(LAMDBA_COORD)
                        .boundingBoxPriors(priors)
                        .build(), "convolution2d_9")
        .setOutputs("outputs")
        .build();

log.info("\n Model Summary \n" + model.summary());
log.info("Train model...");
//model.setListeners(new ScoreIterationListener(1)); // print the score after each iteration to stdout
model.setListeners(new StatsListener(statsStorage)); // visit http://localhost:9000 to track the training process
for (int i = 0; i < EPOCHS; i++) {
    train.reset();
    while (train.hasNext()) {
        model.fit(train.next());
    }
    log.info("*** Completed epoch {} ***", i);
}

log.info("*** Saving Model ***");
ModelSerializer.writeModel(model, "model.data", true);
log.info("*** Training Done ***");
```

Rubik's detector app

As described in Table 1, the class RubixDetector reads a stream of frames from the webcam and resizes each frame to the Yolo model's input size (416x416). It then passes the image to the method detectRubixCube of the class YoloModel, which detects the Rubik's cube in the image and applies the non-max suppression algorithm (the class NonMaxSuppression) to the model's output. Finally, it draws the resulting boxes on the input image.

```java
YoloModel detector = new YoloModel();
final AtomicReference<opencv_videoio.VideoCapture> capture = new AtomicReference<>(new opencv_videoio.VideoCapture());
// Open the default camera (device 0) first: capture properties only take effect on an opened device
if (!capture.get().open(0)) {
    log.error("Can not open the cam !!!");
    return;
}
capture.get().set(CV_CAP_PROP_FRAME_WIDTH, 1280);
capture.get().set(CV_CAP_PROP_FRAME_HEIGHT, 720);

opencv_core.Mat colorimg = new opencv_core.Mat();
CanvasFrame mainframe = new CanvasFrame("Real-time Rubik's Cube Detector - Emaraic", CanvasFrame.getDefaultGamma() / 2.2);
mainframe.setDefaultCloseOperation(javax.swing.JFrame.EXIT_ON_CLOSE);
mainframe.setCanvasSize(600, 600);
mainframe.setLocationRelativeTo(null);
mainframe.setVisible(true);

while (true) {
    while (capture.get().read(colorimg) && mainframe.isVisible()) {
        long st = System.currentTimeMillis();
        // resize the frame to the model input size (IMAGE_INPUT_W x IMAGE_INPUT_H, i.e. 416x416)
        opencv_imgproc.resize(colorimg, colorimg, new opencv_core.Size(IMAGE_INPUT_W, IMAGE_INPUT_H));
        // detect the cube and draw the boxes onto colorimg; .4 is the detection threshold
        detector.detectRubixCube(colorimg, .4);
        double per = (System.currentTimeMillis() - st) / 1000.0; // elapsed time in seconds
        log.info("It takes " + per + " seconds to make detection");
        putText(colorimg, "Detection Time : " + per + " s", new opencv_core.Point(10, 25), 2, .9, opencv_core.Scalar.YELLOW);
        // converter is assumed to be an OpenCVFrameConverter.ToMat field of the class
        mainframe.showImage(converter.convert(colorimg));
        try {
            Thread.sleep(20);
        } catch (InterruptedException ex) {
            log.error(ex.getMessage());
        }
    }
}
```
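The body of detectRubixCube is not shown here, and the repository may well delegate this step to DL4J's Yolo2OutputLayer. To illustrate what turning the network output into boxes involves, the sketch below applies the Yolo v2 decoding equations from the YOLO9000 paper to one anchor of one grid cell: the box center is sigmoid(tx) + cx and sigmoid(ty) + cy, the size is the anchor size scaled by exp(tw) and exp(th), and the confidence is sigmoid(to). The class name, the 32-pixel cell size (416 / 13), and the raw values in the example are assumptions; the .4 passed to detectRubixCube above is presumably a threshold on a score of this kind.

```java
// Hypothetical sketch of Yolo v2 box decoding (YOLO9000 paper); not the repository's YoloModel code.
class YoloDecode {
    static final int CELL_SIZE = 32; // pixels per grid cell: 416 / 13

    static double sigmoid(double v) {
        return 1.0 / (1.0 + Math.exp(-v));
    }

    // Decodes the raw outputs (tx, ty, tw, th, to) of one anchor in grid cell (cx, cy).
    // anchorW/anchorH are the prior box size in grid-cell units.
    // Returns {centerX, centerY, width, height} in pixels, followed by the confidence.
    static double[] decode(double tx, double ty, double tw, double th, double to,
                           int cx, int cy, double anchorW, double anchorH) {
        double bx = sigmoid(tx) + cx;       // center x, grid units
        double by = sigmoid(ty) + cy;       // center y, grid units
        double bw = anchorW * Math.exp(tw); // width, grid units
        double bh = anchorH * Math.exp(th); // height, grid units
        return new double[] {bx * CELL_SIZE, by * CELL_SIZE, bw * CELL_SIZE, bh * CELL_SIZE, sigmoid(to)};
    }

    // Corner form {x1, y1, x2, y2}, as needed for drawing or non-max suppression.
    static double[] toCorners(double[] box) {
        return new double[] {box[0] - box[2] / 2, box[1] - box[3] / 2,
                             box[0] + box[2] / 2, box[1] + box[3] / 2};
    }

    public static void main(String[] args) {
        // All-zero raw outputs for anchor {3.5, 8} in cell (6, 4):
        // center (208, 144), size 112x256 px, confidence 0.5
        double[] box = decode(0, 0, 0, 0, 0, 6, 4, 3.5, 8);
        double[] c = toCorners(box);
        System.out.printf("center (%.0f, %.0f), %.0fx%.0f px, confidence %.2f%n",
                box[0], box[1], box[2], box[3], box[4]);
        System.out.printf("corners (%.0f, %.0f) to (%.0f, %.0f)%n", c[0], c[1], c[2], c[3]);
    }
}
```

Because the sigmoid keeps each center offset between 0 and 1, a predicted center can never leave the grid cell that produced it, which is the constraint the YOLO9000 paper introduces to stabilize training.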

References

- 'YOLO9000: Better, Faster, Stronger' by Joseph Redmon and Ali Farhadi.

- Deeplearning4j HouseNumberDetection example.